Hundreds of Millions "Evaporated" Just Because of a Deepfake Video Call: An Unexpectedly Sophisticated AI Scam

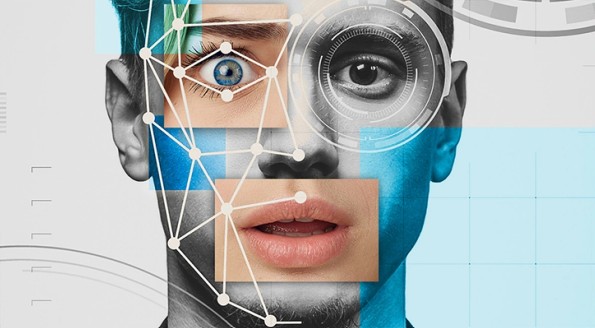

As AI grows rapidly more capable, and especially with video generation tools such as Veo 3, which Google recently introduced at its I/O 2025 event, internet users in Vietnam and around the world face a new danger: trust can be "stolen" with just a few images and recordings shared publicly on social networks.

These are no longer the "spelling error" scams of the past. Scammers now hide behind modern technology and exploit victims' trust and anxiety to steal money and property in a sophisticated, quick and convincing manner.

So how can you recognize and prevent such “high-tech” scams and protect your loved ones from them? This article explains what deepfake scams are, how they operate, and, most importantly, the ground rules that help you and your family avoid becoming the next victims in a fast-changing AI era.

1. AI and deepfakes: When fakes are no longer a joke

On May 20, 2025, at its I/O technology event, Google officially announced Veo 3, an upgraded version of its well-known video generation AI tool. What sets Veo 3 apart is not only its ability to create hyper-realistic, high-resolution videos but also its ability to simulate voices and facial movements like those of a real person, almost indistinguishable to the naked eye.

Although Veo 3 has not been officially launched in Vietnam, many domestic users have used VPNs, fake accounts and technical workarounds to access and test the tool and to spread the videos it produces.

As a result, social networks have been flooded with spam videos, false content, fake advertisements, and even videos that “defame” individuals or fake the likeness of celebrities and government officials. Worse still, these tools have quickly become weapons for criminals to carry out sophisticated scams that target users’ psychology, trust, and habits.

2. When technology is abused: Fraud attacks trust

Worryingly, scammers no longer need the skills of professional hackers. With just a few videos from social networks, a few sentences from a livestream, or a clear photo of a face from a personal Facebook page, they can create a convincing “digital copy” of anyone, from relatives and friends to leaders and state officials.

The most common forms of fraud today are:

Pretending to be a relative and asking for an urgent money transfer to “handle an urgent matter”.

Impersonating a government official to report a violation and demanding "quick payment of a fine" through a designated account.

Making video calls with fake images and voices to “verify identity”, then luring victims into a trap.

With this technique, criminals can win the victim's trust within the first few seconds of a video. This is extremely dangerous because many people, especially the elderly, tend to trust a caller as soon as they see a familiar face and hear the voice of their "children and grandchildren".

3. Who is targeted

Not everyone is a target, but the groups that scammers most often go after are especially vulnerable in today's digital environment:

Elderly people and retirees, who often know little about technology and readily trust familiar images and voices.

Civil servants and public employees, who are busy and lack the time to verify information when they receive urgent calls.

Parents worried about their children, who are easily swayed emotionally and make hasty decisions.

Social media users who post personal information publicly, making it easy for criminals to harvest data for creating deepfakes.

These factors create fertile ground for sophisticated AI scams to flourish and to cause hundreds of millions of dong in damage in the blink of an eye.

4. Crisis of trust in the age of technology

Experts warn that the biggest danger lies not in the financial toll but in people's loss of trust in images, voices and information online.

In a society where “seeing is not believing,” people are becoming suspicious of everything from press releases to fundraising videos to even messages from loved ones. A crisis of trust is quietly spreading, directly affecting the ability to unite communities and protect individuals.

5. Golden rules to protect yourself and your loved ones

In light of this alarming situation, the most important thing is not to fight against AI, but to learn to live safely with it. Here are some useful recommendations from technology experts and authorities:

First, never transfer money on the strength of a video call alone, even if the caller has the same face and voice as a close relative, friend or colleague. Current deepfake technology can closely simulate expressions, gaze, and tone of voice from just a small amount of data taken from social networks or publicly available videos.

This means that the image and voice you see on the call may not be real, and anyone can be impersonated. In the event of receiving a call with a request for money transfer, urgent loan or “urgent work”, people need to stay calm and carefully verify the information.

Some effective ways to verify:

Call back directly via previously saved familiar phone numbers.

Send a private confirmation message asking for something only the real person would know.

Ask a third party to call and check again.

Ask a pre-agreed family “code” question.

Transfer money only once you have complete, accurate information from several independent sources. Never transfer money on emotion or under psychological pressure within a few minutes.
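
The verification steps above can be summarized as a simple decision rule. The following Python sketch is only an illustration: the class name, the field names, and the threshold of two independent checks are assumptions made for this example, not figures given by experts or authorities.

```python
from dataclasses import dataclass


@dataclass
class VerificationChecks:
    """Outcome of each independent check (all names here are illustrative)."""
    called_back_saved_number: bool = False   # called back on a number saved before the request
    private_question_answered: bool = False  # answered something only the real person would know
    third_party_confirmed: bool = False      # another person called and confirmed the story
    family_code_matched: bool = False        # gave the pre-agreed family "code" answer


def safe_to_transfer(checks: VerificationChecks, min_passed: int = 2) -> bool:
    """Return True only if at least `min_passed` independent checks succeeded.

    The video call itself is deliberately not a field: a deepfake can fake it.
    The default threshold of 2 is an assumption made for this sketch.
    """
    passed = sum([
        checks.called_back_saved_number,
        checks.private_question_answered,
        checks.third_party_confirmed,
        checks.family_code_matched,
    ])
    return passed >= min_passed


# A convincing video call with no other check passed is not enough.
print(safe_to_transfer(VerificationChecks()))
# A call back on a saved number plus a matching family code passes the rule.
print(safe_to_transfer(VerificationChecks(called_back_saved_number=True,
                                          family_code_matched=True)))
```

The point of the sketch is that how real the face and voice seemed never enters the decision; only checks made through separate channels count.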

Second, minimize the personal information you share on social networks, especially material such as:

Clear images of your face, in close-up or from multiple angles.

Videos with real voices, livestreams, interviews or chats.

Detailed personal information such as date of birth, school, workplace, phone number, relatives...

Sensitive data such as family photos, children's photos, daily activities, specific schedules...

This content can be extracted, aggregated, and used to create fake videos, deepfakes, or virtual identities without your knowledge. This is a “free resource” that is easily accessible to criminals with just a few simple steps.

Solution:

Set old posts to private.

Do not disclose too much personal information, especially identifying data.

Limit your use of face-recognizing AI filters and trends such as "showing photos from young to old" or "changing voices".

Regularly review the login activity and security settings of your social media accounts.

Third, always be wary of requests for money transfers that are “urgent,” “secret,” or emotionally charged. These are common scenarios in high-tech scams because they exploit psychological pressure to make victims lose their ability to think logically.

Some common examples:

“Mom, I had an accident and need money urgently!”

“I'm being held at the airport and need to pay the processing fee right now!”

“We are the police. You are involved in case XYZ and need to transfer money to clear this up!”

These situations often create time pressure, causing victims to fall into a state of anxiety, fear or pity, leading to hasty decisions without careful verification.

Principles against emotional traps:

Don't get swept along by the caller's fast, rushed way of speaking.

Ask clear questions, don't hesitate to ask for verification.

Stop the call if you see any suspicious signs.
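
The warning signs described in this section (urgency, secrecy, demands for money, claims of authority) can be turned into a rough checklist. The Python sketch below is a toy illustration only: the category names and phrase lists are assumptions chosen for this example, and simple phrase matching can miss a real scam or flag an innocent message. It is not a detection tool.

```python
import re

# Illustrative phrase lists (assumptions for this sketch, not an official list).
RED_FLAGS = {
    "urgency": ("urgent", "urgently", "right now", "immediately"),
    "secrecy": ("secret", "don't tell", "do not tell"),
    "money": ("money", "transfer", "fee", "fine", "loan"),
    "authority": ("police", "detained", "case"),
}


def red_flags(message: str) -> list[str]:
    """Return the red-flag categories whose phrases appear as whole words in `message`."""
    text = message.lower()
    found = []
    for name, phrases in RED_FLAGS.items():
        # \b keeps "fee" from matching inside unrelated words such as "feel".
        if any(re.search(r"\b" + re.escape(p) + r"\b", text) for p in phrases):
            found.append(name)
    return found


print(red_flags("Mom, I had an accident and need money urgently!"))
```

Any flagged category is a cue to slow down, ask questions, and verify through another channel. An empty result does not mean a message is safe.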

Finally, regularly remind, guide and support family members, especially:

Elderly: grandparents, retired parents.

Retired officials: often have little exposure to modern technology.

People who live alone or are not digitally savvy.

These groups are easy targets because they tend to be trusting and are less able to recognize fake images, fake voices or fake news online.

You can:

Host a talk, explaining in simple terms how deepfakes work.

Share real-life examples from news reports and illustrative videos.

Create a “household verification rule”: for example, always ask a “private” question that only real people would know.

Install software that supports deepfake detection or helps check video origin information.

This is one of the most practical ways to help your whole family improve their self-protection capabilities in an increasingly complex and unpredictable digital environment.

In an era where technology is developing at breakneck speed, the line between real and fake is becoming increasingly fragile. What we see on the screen, whether a familiar face, a familiar voice, or even a video call, may seem real but is not necessarily real.

AI tools such as Veo 3, deepfake videos, and synthesized voices can become dangerous weapons for increasingly sophisticated and unpredictable fraud when they fall into the wrong hands. Just one minute of carelessness can cause hundreds of millions of dong to "evaporate" before you have time to react. Worse, the aftermath includes confusion, loss of trust, and deep psychological trauma for victims and their families.

However, AI is not the enemy. It is important that we learn to recognize and understand the risks and equip ourselves and our loved ones with the skills to protect ourselves.