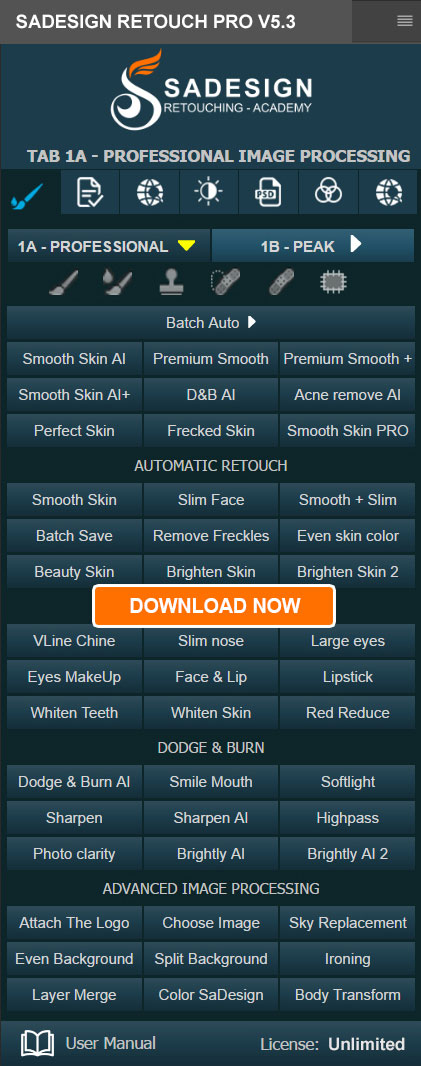

No longer a "cold machine", ChatGPT is now more caring than you think

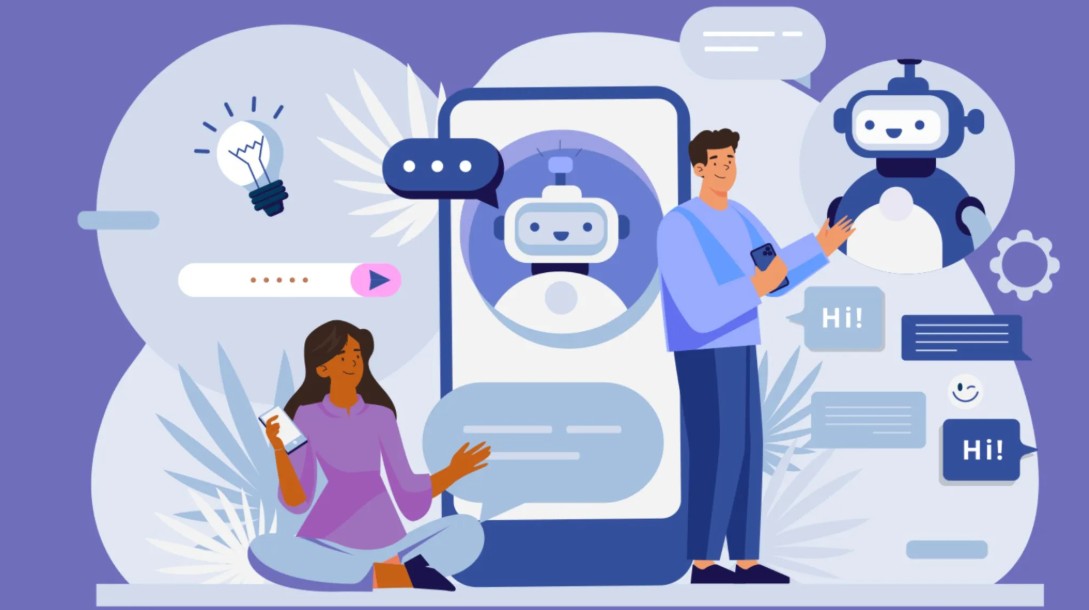

From now on, chatting with AI is no longer boring. ChatGPT can sense and respond more gently and delicately.

Throughout its development, OpenAI has been a pioneer in creating AI tools that can “talk like a real person”. GPT-3 amazed the world with its creativity, GPT-4 followed, and the latest version, GPT-5, is considered an important milestone on the road to perfecting artificial intelligence. However, along with these technical advances, users also began to notice another change: ChatGPT was becoming more accurate, but also increasingly cold.

Feedback from the community suggests that ChatGPT in recent versions has lost some of its “soul” that once made people love it. The responses are still coherent and logical, but lack empathy and the “human” feel in each response. Many have likened ChatGPT to a smart professor who says the right things but has no warmth in his tone.

In response to that backlash, OpenAI made a strategic decision: to bring emotion back into ChatGPT. This move is not just a technical improvement, but also an affirmation of OpenAI’s vision that the future of AI lies not just in intelligence, but also in emotion.

1. When AI learns empathy

According to the official announcement, the upcoming update will bring a series of major changes. ChatGPT will have a customizable personality, allowing users to choose how warm, formal, or intimate they want its responses to be. In addition, OpenAI will launch an Adult Mode in December, allowing age-verified users to discuss more private and mature topics in a safe, controlled environment.

CEO Sam Altman shared that, in addition to expanding the ability to express emotions, OpenAI is also developing tools to protect users' mental health, ensuring that freedom comes with safety. In other words, OpenAI is not only teaching AI to “laugh”, but also to “care”, to “listen”, and to know when to “stop”.

This step reflects OpenAI's humanistic vision: creating AI not to replace humans, but to accompany them.

2. Why does OpenAI want ChatGPT to be “warmer”?

One question that arose immediately after OpenAI's announcement was: why does the company want to bring back emotions, when the core goals of AI are logic, reason, and efficiency?

The answer lies in the way users have interacted with ChatGPT in recent years. Initially, the chatbot was seen as a tool for looking up information and creating content. But over time, ChatGPT has gradually become an emotional companion for millions of people. Many chat with it not only to learn or work, but to share their feelings, relieve stress, or simply find someone who will “listen without judgment”.

But when ChatGPT becomes too “safe,” the conversations lose their naturalness. Responses that are formulaic, avoid sensitive topics, or stay on the surface make users feel distant. A chatbot that is too cold, no matter how accurate, cannot make people want to confide.

OpenAI saw this not only through data, but also through direct feedback from the community. Many users expressed that they “missed the old ChatGPT,” a version that was a bit more naive, humorous, and “talked like a friend.”

But more deeply, restoring emotion to AI is also a long-term competitive strategy. While competitors like Google with Gemini or Anthropic with Claude are working to develop AI that can express emotions more naturally, OpenAI cannot stand aside. Users today do not just want “an answer tool”, but “a conversational character”.

A “warmer” ChatGPT increases engagement and expands its applications in education, mental health care, consulting, and customer support. In the near future, AI will not only be a machine that supports work, but could become a “digital companion” that understands and adapts to human emotions.

Furthermore, ChatGPT's warmth carries a deeper social meaning: the humanization of AI. When technology knows how to listen, people feel more respected, seen not merely as users but as individuals with emotions and a need to communicate and be understood.

So OpenAI's goal is not simply to “make ChatGPT cuter”, but to make AI more approachable, natural, and emotionally rich, so that each conversation is not just an exchange of information but a real connection between people and machines.

3. Personality settings

In an upcoming update, OpenAI will allow users to customize ChatGPT's personality, a feature that is seen as an important step forward in the journey to personalizing AI experiences.

Instead of having just one response style, ChatGPT will have the ability to change its “tone” and “mood” based on the user’s wishes. Some people will want ChatGPT to be light-hearted and empathetic; others will want it to be serious, professional, or even dry.

Interestingly, this customization extends beyond language to emoticons and communication styles. Users can choose between “warm and friendly,” “serious and academic,” or “energetic, fun, and humorous.”

Technologically, this requires the language model to have a deeper grasp of emotional context and tone modulation. ChatGPT must not only choose the right words, but also “choose the right way to say them.”

The arrival of this feature also marks a shift in AI design philosophy. Previously, users had to adapt to how AI worked. Now, AI will adapt to how humans want to communicate.

But behind that freedom lies a question worth pondering: will personality customization cause AI to lose its own identity? As each user creates their own “version of ChatGPT,” are we creating countless virtual personalities, blurring the line between real and fake?

But on the bright side, this is the future of AI personalization. When technology serves humans at such a sophisticated level, it becomes more than just a tool; it becomes an extension of ourselves. A ChatGPT can be a teacher, a mentor, a creative partner, and a listening friend all with just a few emotional settings.

4. Adult mode

One controversial change is OpenAI’s announcement that it will launch Adult Mode in December. This will be the first time the company has allowed adult users to discuss adult topics in a controlled environment.

According to OpenAI, this mode is not intended to encourage sensitive content, but rather to open up spaces for honest and safe conversations. Many topics such as sexology, gender psychology, personal relationships or reproductive health, which were previously off-limits, will now be allowed to be discussed within appropriate boundaries.

The prerequisite is that users must verify their age. This is considered a necessary safeguard to distinguish adults from minors. However, this also raises concerns about personal data privacy.

To gain access, users may be required to provide sensitive information such as identity documents or facial recognition data. If this verification mechanism is not transparent enough or is abused, it can lead to personal data leaks.

OpenAI claims it will apply the highest security standards, only use verification information for age verification purposes and not store it long-term, but whether users will trust it or not is another story.

Socially, adult mode sets a new precedent: for the first time, a major AI platform recognizes that adults have the right to freely express their emotions and needs, as long as they are within a safe framework. If implemented effectively, this could be the beginning of an age-stratified AI model, where each group of users has their own space, tailored to their psychology and cognition.

Conversely, if OpenAI fails to keep this mode under control, it could face a wave of criticism and serious distrust, because the line between “freedom” and “overreach” in the AI space is extremely fragile.

5. The Line Between Control and Freedom in the AI Era

Behind the technical improvements, the story of ChatGPT being “warmer” or “freer” is really a story about control.

Users want the freedom to express their emotions, customize their experiences, and communicate naturally. But to achieve that, they have to give up a certain amount of privacy. As ChatGPT learns more about you (how you speak, what you think, whether you are sad or happy), it collects more and more personal data.

Freedom and control thus become two sides of the same coin. For AI to be “more human”, humans must accept AI that “understands them better”, and that is where questions of data risk, ethics and trust come into play.

As the familiar saying goes, “With great power comes great responsibility.” The phrase accurately reflects the challenge of the AI age. As OpenAI pushes the boundaries of ChatGPT, the company is also entering the gray area between progress and social control.

If AI can tailor emotions to users, could it be used to manipulate human emotions? If adult mode allows for more open discussion, is there a risk of spreading misleading or harmful content? Every innovation opens up opportunities, but also comes with great responsibility in protecting human values.

6. How would an “emotional” ChatGPT change the world?

A warm and emotionally intelligent ChatGPT is more than just a convenience; it is presented as a step forward for humanity. When AI can understand human emotions, it will profoundly change the way we learn, work, and connect with the world.

In education, ChatGPT can become a tutor who understands students' psychology and knows when to encourage, when to motivate, and when to listen. In mental health care, AI can play the role of a therapy assistant, helping users work through their initial emotions before they turn to a human professional.

In the workplace, ChatGPT can become a “virtual colleague” that inspires, sparks creative ideas, and even manages work schedules with more tact, rather than serving as just an administrative tool.

But more importantly, an emotional ChatGPT will help humans redefine the relationship between themselves and technology. When machines can empathize, humans will see technology not as a tool, but as an entity capable of emotional interaction. This is the beginning of the era of “personalized AI”.

But here’s the challenge: if AI becomes too human, are we entering a dangerous zone where emotions are programmed and sincerity is simulated? Will humans then be able to distinguish between real emotions and the artificial reactions of machines?

7. Conclusion

OpenAI’s return of emotion to ChatGPT is more than just a software update. It’s a manifesto for the future of AI, where intelligence lies not just in the ability to process data but in the ability to understand people. A ChatGPT that smiles, shares, and responds with empathy is proof that technology can learn compassion, if humans teach it. But at the same time, it’s a reminder that as AI becomes more human, our ethical responsibilities grow. ChatGPT is no longer “cold like a machine,” and that may be heartwarming for many. But more importantly, we need to remember that AI is only truly human when it serves humans rather than replacing them.