Perplexity Officially “Lands” on iOS: AI Voice Assistant Now Available on iPhone

Contents

- 1. Perplexity Upgrades iOS App With Conversational AI Voice Assistant

- 2. How does Perplexity work on iPhone?

- 3. Automatic but not fully

- 4. Comparison with Apple Siri and Apple Intelligence

- 5. Limitations that still exist on iOS

- 6. Why is this a strategic move for Perplexity?

- 7. What is the future for AI assistants on mobile devices?

After making a strong impression in AI search, Perplexity is extending its reach by updating its iOS app with a promising new feature: an intelligent AI voice assistant. iPhone users can now talk to the assistant to handle daily tasks such as composing emails, setting reminders, or even booking dinner reservations, all from the familiar Perplexity interface. The move marks a new step for Perplexity in the AI race and signals its ambition to compete directly with big names like Siri and Google Assistant inside Apple's own ecosystem.

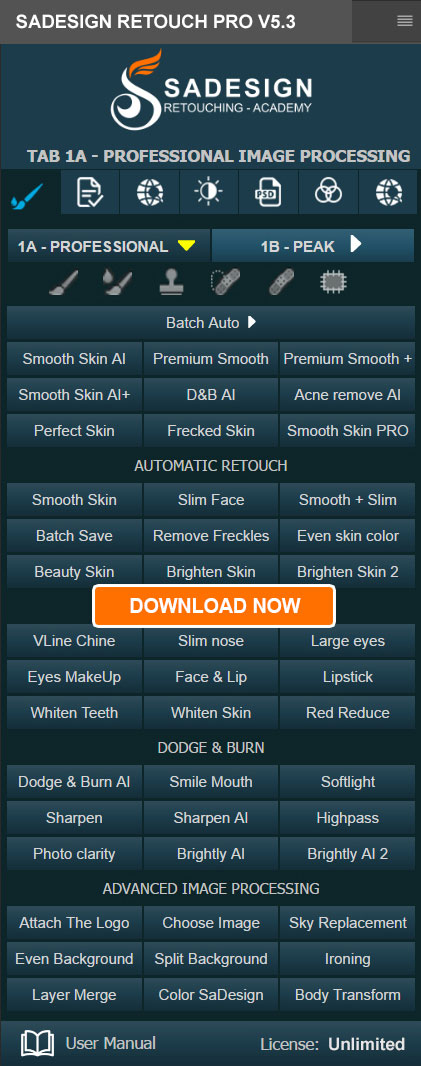

1. Perplexity Upgrades iOS App With Conversational AI Voice Assistant

The most notable feature in this update is the conversational AI voice assistant, allowing iOS users to interact verbally in a natural and convenient way. Without having to type or switch between applications, users can ask the Perplexity assistant to perform tasks such as:

- Compose an email

- Set a reminder

- Open an app such as Uber or OpenTable

- Fill in booking details and appointment times

- Answer questions by voice

Notably, the voice assistant keeps running after users leave the Perplexity app. Not every AI platform can work in the background this way, especially on iOS, which places many security restrictions on apps.

2. How does Perplexity work on iPhone?

Shortly after the update, several users shared their real-world experiences with the Perplexity assistant on their iPhone devices. One notable example was a simple test of setting a cooking reminder for 7 p.m.

After the user spoke the command, the app asked for access to the Reminders app. Once access was granted, the reminder was added as expected. This shows Perplexity working correctly with the iOS permission system and communicating clearly about it, which matters to iPhone users who are used to strict security prompts.

When asked to send a text message, the assistant requested access to contacts. After the request was denied, it proactively suggested entering a phone number manually instead of “giving up” like many other assistants. This shows a flexible user experience and an assistant that can “improvise” intelligently.
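The fallback behavior described above can be sketched in a few lines. This is only an illustrative model, not Perplexity's actual implementation: the names `MessageRequest`, `resolve_recipient`, `lookup_contact`, and `ask_user_for_number` are hypothetical, and the permission state is passed in as a plain boolean rather than read from iOS.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class MessageRequest:
    """A hypothetical parsed voice command such as 'text Alex: running late'."""
    recipient_name: str
    body: str


def resolve_recipient(
    request: MessageRequest,
    contacts_allowed: bool,
    lookup_contact: Callable[[str], Optional[str]],
    ask_user_for_number: Callable[[str], str],
) -> str:
    """Return a phone number, falling back to manual entry when contacts are off-limits."""
    if contacts_allowed:
        number = lookup_contact(request.recipient_name)
        if number is not None:
            return number
    # Permission denied (or no matching contact): ask the user instead of aborting.
    return ask_user_for_number(request.recipient_name)
```

The point of the design is that a denied permission changes the path to the goal, not the outcome: the task still completes, just with one extra question to the user.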

3. Automatic but not fully

One thing to note is that Perplexity does not yet automate the entire workflow. For example, when you request a dinner reservation, the assistant opens the OpenTable app and pre-fills the date and time given in the voice command. The user still has to confirm the final step to complete the reservation.

Similarly, when you request a ride through Uber, Perplexity can open the app and fill in the itinerary, but you still have to tap Confirm to place the booking. The feature is therefore at a “semi-automated” stage: a significant step forward, but not yet a complete replacement for human intervention.

From a UX/UI perspective, however, this is also a deliberate decision: it keeps the user in final control of actions that could cost money or change personal schedules.
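A minimal sketch of this “prepare, then confirm” pattern follows. It is an assumption-laden illustration rather than a description of how Perplexity, OpenTable, or Uber actually work: `PreparedAction`, `prepare_reservation`, and `run` are hypothetical names, and the confirmation step is modeled as a simple callback.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class PreparedAction:
    """A hypothetical action the assistant has filled in but not yet submitted."""
    app: str
    fields: Dict[str, str] = field(default_factory=dict)
    needs_confirmation: bool = True  # costly or schedule-changing actions stay gated


def prepare_reservation(date: str, time: str) -> PreparedAction:
    """Pre-fill a reservation from the voice command without submitting it."""
    return PreparedAction(app="OpenTable", fields={"date": date, "time": time})


def run(action: PreparedAction, confirm: Callable[[PreparedAction], bool]) -> str:
    """Submit the action only if the user approves the final step."""
    if action.needs_confirmation and not confirm(action):
        return "cancelled"
    return "submitted"
```

Here the assistant does all the preparation, while the irreversible last step stays behind an explicit user decision.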

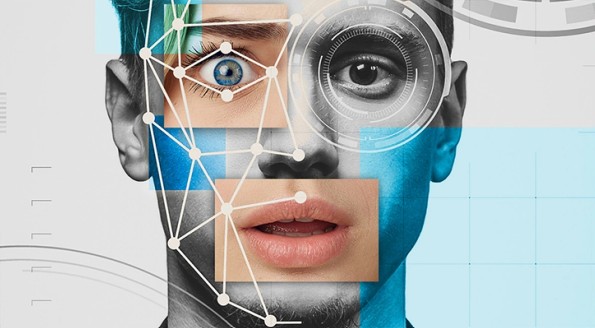

4. Comparison with Apple Siri and Apple Intelligence

When Apple announced the Apple Intelligence platform at WWDC 2024, many expected Siri to be “revived” with more modern AI capabilities, including better contextual understanding, more natural responses, and above all the ability to handle complex tasks on behalf of users. The move is seen as late but necessary, given how far new-generation AI assistants such as ChatGPT, Gemini, and Alexa AI have already pushed the human-machine interaction experience.

In practice, however, Apple has been cautious in rolling out Apple Intelligence. The new features are limited to certain high-end devices, such as the iPhone 15 Pro and Pro Max and iPads and Macs with Apple Silicon chips, and may not be widely available until the end of 2025. Since many users are still on older models such as the iPhone 13 or iPhone 14, they will go without the new AI features for a long time.

Perplexity, meanwhile, has taken the initiative to break that barrier. Its conversational AI voice assistant runs smoothly on older iOS devices, including the iPhone 13 mini, a device released in 2021. This significantly widens the pool of users who can access the new technology, and it helps close the gap between “current users” and “future users” that Apple is creating, intentionally or not.

In addition, users can download the app and start using Perplexity right away, without waiting for a new OS release or a new device. That is a significant advantage in user experience. It creates a sense of “empowerment”: users no longer depend on a major company's rollout decisions and can access personalized AI right now.

So who is ahead? The answer is no longer simply a matter of technological prowess, but rather of speed of implementation and practical accessibility. While Siri may promise a bright future, Perplexity is delivering real value to users today, an advantage that cannot be overlooked in a world where AI is evolving in a user-centric direction.

5. Limitations that still exist on iOS

Despite making great strides in integrating an AI voice assistant into iOS, Perplexity still faces platform-specific limitations – largely due to the iOS operating system itself and Apple's privacy policies.

First, Perplexity on iOS does not currently support screen sharing, as it does on Android. The AI assistant therefore cannot “see” what is on screen in order to help users more intuitively. For example, you cannot ask Perplexity to read the content of a website or guide you through filling out a form, because it has no access to the interface of other applications. This capability is one of the strengths that makes the Android version of Perplexity smarter and more flexible in many situations.

Second, the ability to interact with real-time images is also limited. Unlike ChatGPT Vision or xAI’s Grok, which can access the camera and “see” the surrounding scene, Perplexity cannot use the iOS camera directly to analyze images. Users can still send photos and ask about them, but that requires a manual step, which is a far cry from the seamless experience that camera-based AI assistants aim for.

Third, Perplexity cannot access the iOS system to perform operations such as setting recurring alarms, changing device settings, or controlling operating system functions, as Siri can. This limitation applies to all third-party applications on iOS, because Apple does not grant them full system permissions. The result is an experience that is sometimes interrupted and not as complete as with the built-in Siri.

6. Why is this a strategic move for Perplexity?

Expanding the voice assistant to iOS is not just a technical update, but also a strategic move in the battle for personal AI market share.

While companies like Google, OpenAI, Apple, Amazon, and Meta are all developing their own AI assistants, Perplexity is finding its own path: an intelligent search assistant that can also perform real tasks, with fast response times, a minimalist interface, and a focus on utility.

In particular, supporting older devices and quickly deploying on both Android and iOS helps Perplexity reach a wider user base, while other competitors are still limited by hardware or operating system.

7. What is the future for AI assistants on mobile devices?

As AI technology converges with voice interaction and natural language processing, mobile devices are the next frontier for major tech companies. Users not only want to ask questions and get answers, but also want actions to be performed immediately by their own assistants.

While Perplexity still needs some time to mature, the important thing is that it has started and is learning from real user feedback. The fact that it can handle voice commands, open other apps, make suggestions dynamically, and run even on older devices is a positive sign for the future.

Perplexity has made a bold and quite successful move into the iOS ecosystem with its intelligent voice assistant. While it still has some limitations when compared to native assistants like Siri or other high-end AI competitors, the important thing is that iOS users now have a reliable option to perform everyday tasks by voice – even if they have an older iPhone.