
Google AI Accused of Leading Users to “Dirty” Websites – What Is the Truth?


Users and technology experts have recently found that Google's AI tools, when asked to look up software or information, sometimes point them to malicious websites rather than reputable sources.

Artificial intelligence was once expected to be the key to orienting users in the vast sea of information on the Internet. With the advent of tools like Gemini or AI Overviews, Google does not simply provide keyword-based search results, but also acts as an “intelligent assistant” that can filter, summarize and quickly suggest solutions for users.

In practice, however, that trust is being shaken. Some users and technology experts report that instead of being sent to reputable addresses, they were sent to websites that contain false information or, worse, install malware.

1. Testing and warnings from experts

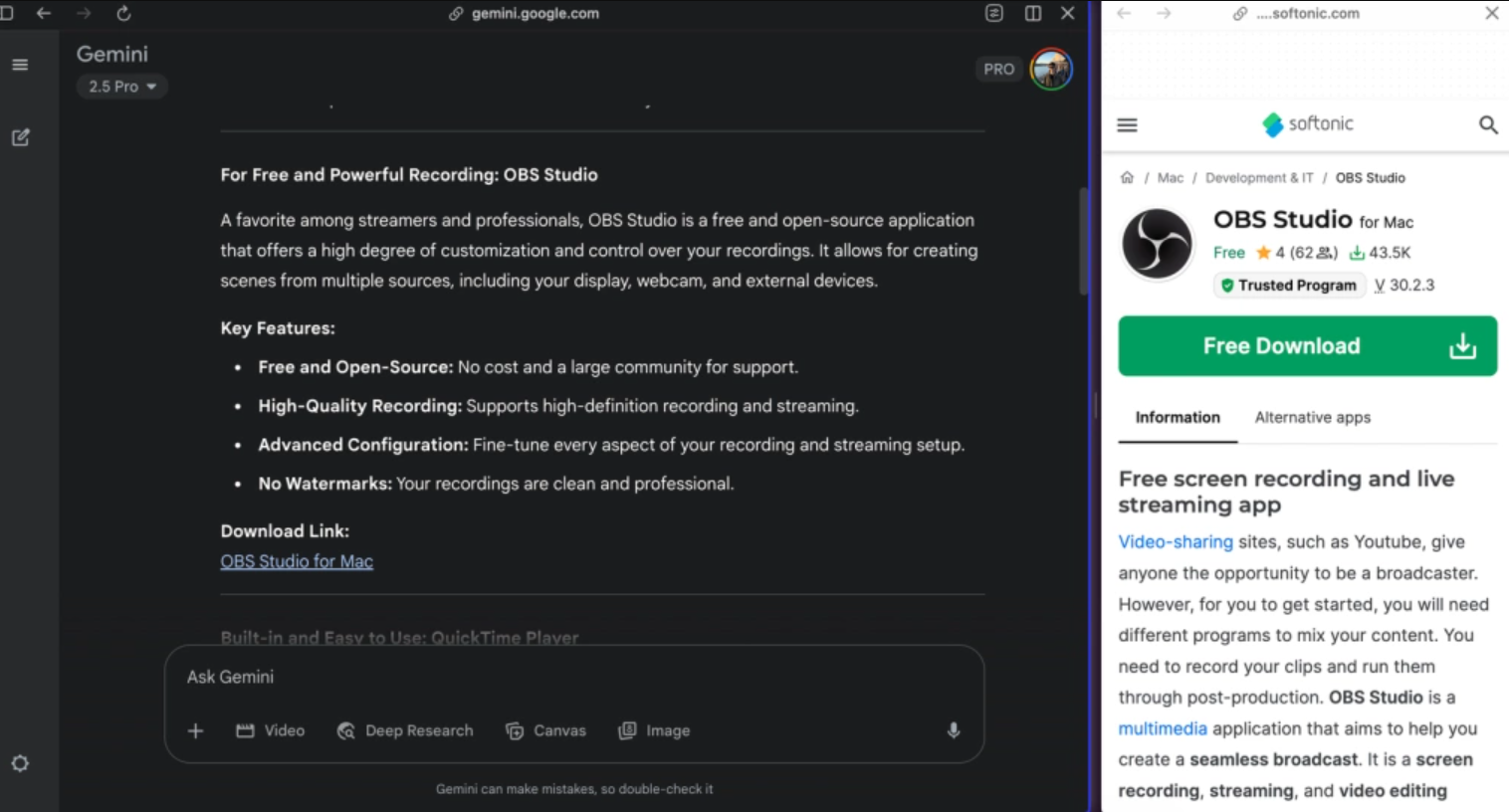

The story begins when Raghav Sethi, a senior writer for the tech site MakeUseOf, decided to test how well Gemini directs users to information. His initial goal was to find some useful and trustworthy software, but the results were startling. Instead of recommending legitimate sources such as the software’s home page or an official app store, Gemini suggested links to websites that security experts have blacklisted, most notably Softonic.

For those who follow technology, Softonic is a familiar name. The site has long been known for wrapping free software in its own installers, bundled with adware, fake search engines or other unwanted programs. Notably, despite years of warnings from experts, Softonic still regularly appears at the top of Google Search results. Now it is even included in the answers that Google's AI synthesizes and suggests.

According to Sethi, experienced users can easily recognize Softonic and avoid it. For older people, students, or those who do not use technology regularly, however, the risk of clicking such a link and installing malware is very high. The danger is greater because AI-generated results are presented in a polished visual format that looks trustworthy and carries extra weight in the eyes of ordinary users.

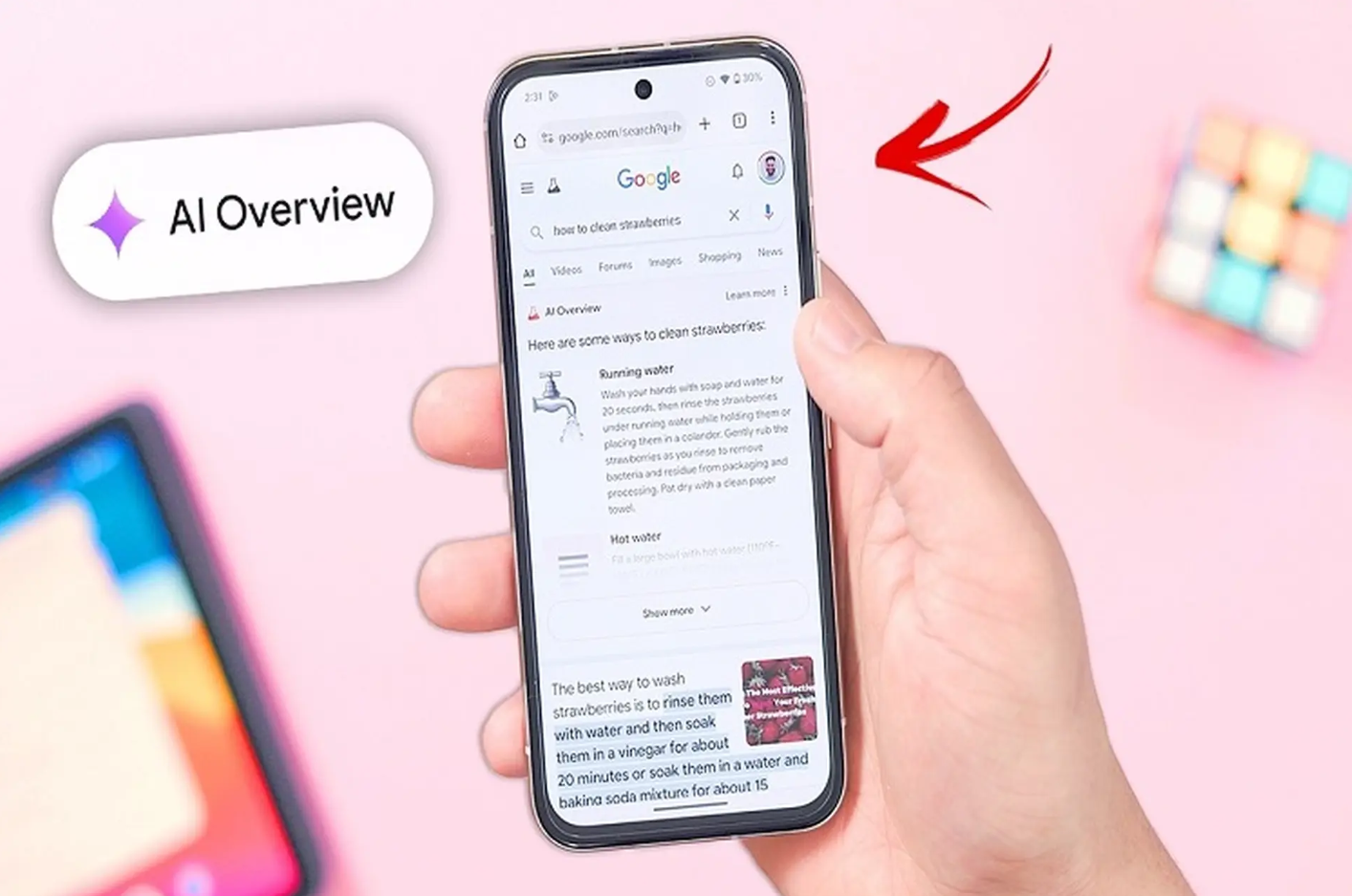

2. A powerful tool called AI Overviews

Gemini is not the only tool affected. AI Overviews, a feature that summarizes and aggregates answers and that Google introduced at its I/O event, was found to behave similarly. The feature uses large language models (LLMs), including Gemini, to read and extract content from hundreds of websites, then presents it as a concise paragraph at the top of the search results.

In theory, AI Overviews should save users time by not having to open each link to find information. But in practice, the tool sometimes acts as “indirect marketing” for shady, untrustworthy, or even outright fake websites.

Writers at MakeUseOf ran many tests. In some cases, AI Overviews led users to websites designed to look like software stores or online services that, on closer inspection, were only a shell built to extort money or trick users into downloading malware. Sophisticated interface design and black-hat marketing tricks make it easy for these pages to slip past Google's filtering algorithms, enter the data the AI draws on and appear in the quick answer section.

3. Social media reacts

3.1. Users speak up

The problem drew more attention when many Internet users found similar cases and shared their experiences on social networks such as X (Twitter) and Facebook. One user pointed out that AI Overviews had suggested a fake software-sharing page with a name similar to that of a famous brand. After the download, anti-virus software immediately flagged the program as malware.

Lily Ray posted a series of images documenting the malicious websites that AI Overviews suggested. In a post on X, she commented: “Unbelievable, Google even recommends spam websites as part of the official answer.” The post quickly attracted thousands of comments, many of which questioned whether Google’s AI can monitor and filter its own content.

3.2. Google remains silent

As of this writing, Google has yet to comment officially on its AI suggesting unsafe websites. That silence leaves users who once expected Google to be a trustworthy ecosystem feeling abandoned.

While industry experts understand that monitoring billions of search results every day is a daunting task, the repeated appearance of malicious websites in AI results raises the question: Is Google really prepared to “give guidance” to artificial intelligence?

4. Other AI platforms have similar problems

Google is not alone. Other AI tools such as ChatGPT (in browsing mode), Perplexity and Grok have also been found to suggest inaccurate content or link to low-trust websites. The difference is that users tend to trust Google more, because it is the search platform that has been part of their digital lives for more than two decades.

That trust is reinforced when AI-generated results are displayed prominently at the top of the page under Google’s recognizable logo, which makes users less skeptical of them than of organic links or ads. As a result, when errors occur, the consequences are much more severe than on less popular platforms.

5. Information checking skills

As AI becomes a ubiquitous tool and gradually replaces humans in navigating information, the ability to verify and authenticate sources of information becomes an essential “digital vaccine”. According to TechRadar’s analysis, the most important thing is not what answers AI provides, but whether users have the ability to independently verify and evaluate.

When searching for software, the first advice is to go to reputable platforms such as the App Store, Google Play or the official website of the software developer. When using a search engine, instead of clicking the first link suggested by AI, take a few seconds to read the domain name carefully, identify the organization that owns the website, and avoid “strange” domain endings such as .xyz or .top, as well as confusing variations of well-known names.
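The domain check described above can be sketched in a few lines of Python. This is only an illustrative sketch, not a real safety filter: the function names, the tiny list of endings (taken from the examples named in this article) and the `example.com` domains are all assumptions made for the demonstration, and a clean result does not prove that a site is safe.

```python
from urllib.parse import urlparse

# Assumption: endings taken from the examples in this article.
# This is an illustration, not an authoritative blocklist.
UNUSUAL_ENDINGS = {"xyz", "top"}


def hostname_of(url: str) -> str:
    """Return the lowercase hostname of a URL, with or without a scheme."""
    parsed = urlparse(url if "://" in url else "//" + url)
    return (parsed.hostname or "").lower()


def review_link(url: str, official_domains: set[str]) -> list[str]:
    """Return human-readable warnings for a link (hypothetical helper)."""
    host = hostname_of(url)
    if not host:
        return ["could not read a hostname from this link"]

    warnings = []

    # The ending is whatever follows the last dot in the hostname.
    ending = host.rsplit(".", 1)[-1]
    if ending in UNUSUAL_ENDINGS:
        warnings.append(f"unusual domain ending: .{ending}")

    # A link counts as official only if the hostname IS a known domain
    # or is a subdomain of one. A name that merely contains the brand,
    # such as example.com.evil.top, does not pass.
    is_official = any(
        host == domain or host.endswith("." + domain)
        for domain in official_domains
    )
    if not is_official:
        warnings.append(f"{host} is not one of the known official domains")

    return warnings


if __name__ == "__main__":
    official = {"example.com"}  # hypothetical developer domain
    for link in (
        "https://downloads.example.com/app",
        "https://example-downloads.xyz/app",
        "example.com.evil.top/setup.exe",
    ):
        print(link, "->", review_link(link, official) or "no warnings")
```

The subdomain test deliberately reads the hostname from the right, because lookalike pages often place a trusted name at the left of a longer, unrelated domain.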

Similarly, if you are making financial transactions, registering for an account, or accessing sensitive data, you should carefully check the security certificate (SSL), community reviews, and especially the website's activity history on tools like Whois or Internet Archive.

6. Challenges for Google

Google's AI redirecting users to websites containing malware is not just a technical error, but also a wake-up call to a larger issue: the ethical and social responsibilities of artificial intelligence technology.

Google is in a unique position. Not only is it the world’s most popular search engine, it is also the most influential AI platform provider. Every change to Google’s algorithm can impact millions of users, hundreds of thousands of businesses, and shape the way people understand and interact with information.

If AI is deployed without layers of thorough and transparent vetting, cases like Softonic will be just the tip of the iceberg. The risks extend beyond malware to manipulating public opinion, promoting fake news, or worse, compromising users’ privacy and digital assets.

7. What is the solution?

There is no perfect solution, but there are steps that can minimize the risk.

First, Google needs to be more transparent about how AI selects information sources, as well as the criteria for assessing the credibility of websites. The evaluation system should involve human beings, especially security, content and legal experts, instead of leaving the decision entirely to the algorithm.

Second, AI needs to learn to prioritize sources that have proven authenticity, are recognized by the community for their trustworthiness, and have a long history in their field of expertise. A newly created page should not be equated with a page that has been around for years just because it has a temporary spike in traffic.

Third, users themselves need to change their habits when using AI. Instead of “leaving” all decisions to AI, consider it a reference tool, not an absolute source of truth. Taking a minute to verify the source of information can help avoid hours of troubleshooting after clicking on a malicious link.

AI is a powerful tool, but it is also a double-edged sword. The convenience it brings must be matched by awareness and responsibility from both developers and users. The cases in which Gemini and AI Overviews directed users to spam websites are not only a technical lesson, but also a reminder that in the digital world, trust is the easiest asset to lose and the hardest to regain.

Google and other tech companies need to act quickly and decisively to restore that trust. And users, more than ever, need to learn to walk with AI, not as blind followers, but as intelligent, alert, and independent-minded companions.