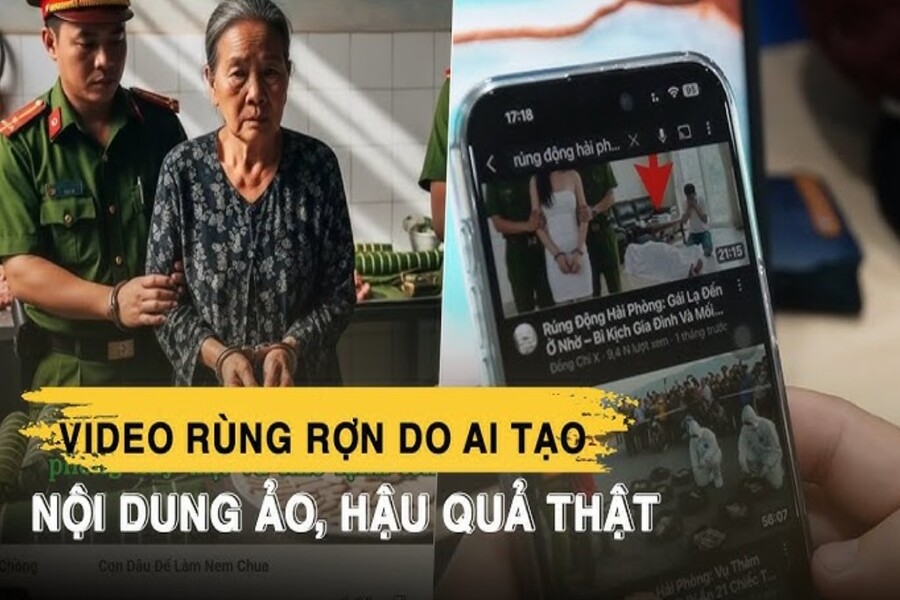

Shudder With AI-Created Videos: Views Based On Reality

It can be said that the most dangerous thing is not whether these videos are real or fake, but that they deceive people's true emotions.

In just the past few months, a new wave has been quietly reshaping digital content on YouTube in Vietnam: videos created by artificial intelligence (AI) around sensational, dark, and creepy motifs. They spring up like mushrooms after the rain, flooding recommendation feeds and creeping into users' playlists. Unlike well-funded documentaries or reality TV shows, these videos usually last only a few minutes, but that is enough to subtly sow doubt, fear, and insecurity.

What they have in common is a tediously repetitive structure that still appeals to a mass audience: a monotonous, emotionless voice narrating fictional murder cases full of gory details and lurid plot elements such as jealousy, incest, murder and body disposal, and mass killings. This might seem like just another niche entertainment genre, but a closer look at each video reveals what makes people shudder: the stories are often closely tied to specific locations and even work in local specialties.

1. Attaching place names and local specialties to negative content to attract views

Thanh Hoa, Nghe An, Hai Phong, Da Lat, Quang Ngai, Tay Ninh... a series of provinces and cities have suddenly become the setting for imagined bloody crimes. What shocked the public was that the illustrations in the videos were extremely realistic AI creations: from familiar city scenes and restaurant signs to the faces and gestures of the "characters", everything had a vividness that was difficult to distinguish from real life. Some videos even used images of specialty dishes such as fish noodles, sour sausage, eel soup, and Trang Bang rice noodles as "props" to heighten the sense of authenticity and create a jarring, horrifying contrast.

The combination of realistic images and cold narration distorts the image of these places in the viewer’s mind. Although the producers state that each video is a “work of fiction” or uses “AI-generated images”, the repetition of harmful content attached to specific locations gradually breeds confusion.

Just a few minutes of reading the comments below each video reveals reactions ranging from concern and skepticism to outright fear. Many viewers confessed that after watching a video about a gruesome murder at a fish noodle shop in Hai Phong, they could not eat the dish for days. Others said they felt a chill whenever they heard eel soup mentioned, because the video had left them with the image of a body hidden in a pot of boiling water.

This influence is invisible but extremely harmful. Not only does it damage the local image in the eyes of the online community, it can also negatively affect the tourism and culinary services industries, which are slowly recovering from the pandemic. For localities that are trying to build a cultural and culinary brand or develop local tourism, videos like this are no different from an invisible but effective smear campaign.

The problem does not stop at "cheap view-baiting", but also reflects a more dangerous reality: artificial intelligence is being exploited indiscriminately by unethical content creators, willing to use the reputation, history, and specialties of a land as tools for profit, regardless of the damage caused to the community.

2. Virtual content, real harm

Arguably, the most dangerous thing is not whether these videos are real or fake, but that they deceive people's true emotions. In a world where AI-generated images can almost perfectly reproduce every expression, action, and scene, the line between fiction and reality is becoming increasingly blurred.

When people are constantly exposed to gruesome, bloody crime videos, they are likely to become emotionally numb. Young people in particular, who are still forming their worldview and value system, tend to be easily influenced by content that stimulates the nervous system. At first it may be mere curiosity, but gradually it becomes an addiction to strong sensations, then a tendency to seek out increasingly horrifying content. Many psychological studies support this, indicating that repeated exposure to violent images can raise the threshold of stimulation and leave viewers indifferent and insensitive to the pain of others.

It does not stop there. When details such as murder over adultery, disposing of a loved one's body, and incest within the family are presented as a "work of art", with elaborate scenes and soft voiceovers, viewers' moral compass can drift without their even realizing it. They may come to think this is "normal", or worse, lose the ability to distinguish right from wrong in social relationships. For young people without a solid educational foundation, the long-term consequences can include skepticism about traditional values and loss of faith in family, community, and even themselves.

There is no denying that AI is a powerful tool for supporting creativity, but when it falls into the hands of individuals who care only about profit and feel no responsibility to the community, a single piece of fake content is enough to destroy real trust. In fact, there have been cases where viewers contacted local authorities to “verify the case” simply because they believed the video was real. This confusion not only wastes social resources but also puts pressure on information and communication management agencies.

Some psychologists have even warned that if this trend continues to spread, society will face a “negative information pandemic”, where the emotion of fear is abused to manipulate consumer behavior, social consciousness and even belief in reality.

So who is responsible? Accountability is hard to assign when video creators always include the words “fiction” or “not real”. But clearly, when such content is rampant on the internet, the responsibility lies not only with the content creators, but also with the distribution platforms and the viewers themselves.

Platforms like YouTube need more context-aware algorithms to limit the recommendation of negative content, especially when it is unethical or harms a particular community. Meanwhile, viewers also need to stay clear-headed and take responsibility for their own feelings and the feelings of others. Boycotting, reporting, or blocking channels that specialize in harmful content are small actions that will have a positive effect in the long run.

3. When AI becomes an “emotional weapon”

Negative content is nothing new. Sensational media, tabloids, and horror movies have been around for decades. What’s different is that AI has lowered the barrier to creation, allowing anyone to become a “producer” in minutes, without editing, scriptwriting, or directing skills. With just a few simple prompts, an AI-written script, and automated video editing software, an anonymous person can produce dozens of “murder” and “haunted” videos every day.

The ability of AI to create realistic images, especially with models like Midjourney, DALL·E, or Stable Diffusion, has reached a level where the naked eye has difficulty telling real from fake. When those images are placed in familiar everyday settings such as bakeries, pho restaurants, or crowded residential areas, viewers can easily be fooled into thinking that these are real photos, real people, and real events.

This puts AI at risk of becoming an emotional weapon if misused. When imagination is combined with massive training data, “molded” by unethical individuals, the end result is not a work of art, but a toxic product that causes psychological damage, distorts perceptions, and manipulates public opinion.

4. An ethical fence is needed for AI-generated content

No one denies creative freedom. But like all freedoms, it must be accompanied by ethical responsibility. In a world where AI can recreate anything, truth becomes the most easily manipulated thing.

The content creation community needs a code of digital ethics, built on consensus between AI developers, distribution platforms, and the user community. These rules should go beyond labeling content as “fiction” and also include mechanisms to verify the origin of images and to evaluate content against community impact criteria, especially when fabricated elements relate to places, culture, cuisine, and religion.

At the same time, raising user awareness is also vital. In an age where anyone can be a victim of emotional manipulation, the skills of analyzing content, assessing authenticity, and protecting mental health need to be considered part of modern digital education.

5. Conclusion

AI-generated creepy videos are more than just a passing entertainment trend. They are changing the way we perceive the world, see our communities, and evaluate our relationships. Under the guise of creativity and modern technology, there is content that is distorting the truth, bending morality, and hurting the community.

In such a context, the responsibility lies not only with the content creators, but also with each of us, the information consumers. Each time we click to view, like, or share such a video, we help spread a kind of “spiritual virus”. Only when each individual knows how to stop, reflect, and choose wisely can the digital space become a place for spreading knowledge, positive emotions, and kindness, as it was originally intended.