ChatGPT and the dark side of transmitting scientific knowledge

A study from the Science Press Package (SciPak) team at the journal Science found that ChatGPT, while capable of summarizing and simplifying text, often “sacrifices accuracy for understandability.”

In the past few years, artificial intelligence has become the focus of most discussions about the future of technology. The emergence of advanced language tools such as ChatGPT, Claude or Gemini has not only changed the way we write and create, but also opened up access to information in a completely new way. ChatGPT can write an essay in seconds, create engaging media content or answer complex questions in natural language. For ordinary users, these tools are like an “intelligent assistant” that is always ready to help.

However, in highly specialized fields such as science, where accuracy and precision of expression matter more than anything else, ChatGPT raises many concerns. Science is a rigorous process that depends on verification, careful methods, and specialized language. A small deviation in wording can lead to major public misunderstanding. And at a time when society faces a crisis of trust in information, AI's role in science communication becomes even more sensitive.

The SciPak team’s finding that ChatGPT often “sacrifices accuracy for readability” raises a pressing question: can we rely on AI to communicate scientific findings to the public, or is it merely a stopgap tool that requires rigorous human review?

1. Communicating science to the public

Science is inherently difficult for the general public to access. A research paper published in an academic journal often runs to dozens of pages, packed with technical terms, formulas, tables, and data. Scientific writing tends to be dry, focusing on evidence and methods rather than storytelling. This makes it tiring to read, even for people with advanced degrees in other fields.

This is where science communication serves as a bridge. Science journalists, specialized editors, and creators of popular science content “translate” the language of science into stories that are relatable and easy to understand while preserving the spirit and accuracy of the original work. They explain why a discovery matters, how it affects daily life, what limitations to keep in mind, and where further research should go. The job requires specialized knowledge, strong writing skills, and above all, a sense of responsibility for the truth.

When AI like ChatGPT enters the picture, however, many people assume that science journalists can be replaced, since a simple request is enough for ChatGPT to produce a smooth, concise, easy-to-understand summary. But easy to understand is not necessarily correct. When scientific content is overly truncated, exaggerated, or misinterpreted, the public not only misses the chance to understand the research properly but also forms a distorted picture that shapes how they act in real life.

2. Real-world testing: ChatGPT in the Science press room

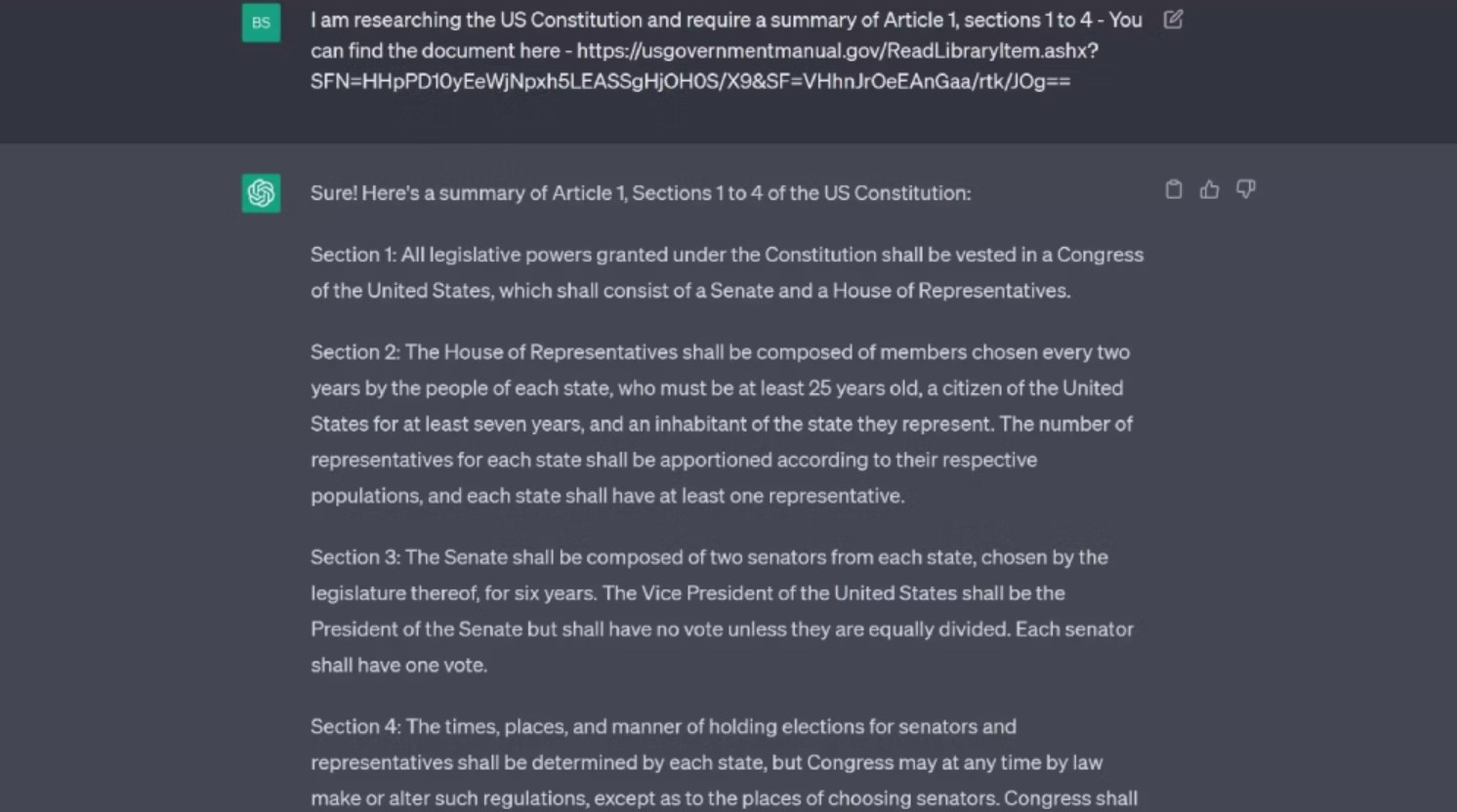

To test ChatGPT's ability to communicate science, the SciPak team ran a systematic experiment. For a year, they used ChatGPT Plus to summarize several scientific papers each week. The results were compared with summaries written by expert editors and evaluated by the studies' own authors.

The findings were telling. ChatGPT produced summaries that flowed well, read naturally, and made the science more accessible to general readers. The price, however, was accuracy. In many cases ChatGPT omitted or misinterpreted key details, and important nuances, such as experimental conditions, limiting factors, or small but meaningful differences in the data, were oversimplified.

In particular, ChatGPT tends to overuse hyperbolic words like “breakthrough”, “revolutionary”, and “game-changing”. To the average reader, this creates the impression that science is constantly making great leaps, when in reality most research advances in small, incremental steps within a long process. When expectations are inflated this way, the public is quickly disappointed if no practical applications appear right away, and that disappointment breeds skepticism toward science.

The SciPak team’s report notes that ChatGPT frequently fails to state clearly the limitations of the research it summarizes. This is a serious omission, because in science, “limitations” indicate what a study cannot yet confirm, what flaws exist in its methods, and how its results should be interpreted in context. Without them, the public may well believe a study has completely solved a problem when in fact it is only a beginning.
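An editor reviewing an AI-generated draft could screen mechanically for the two patterns described above, hyperbole and missing limitations, before reading closely. The Python sketch below is hypothetical and is not a tool the SciPak team used: the hype-word list holds only the three examples named above, the limitation cues are illustrative guesses, and a keyword match is at best a prompt for human judgment, never a verdict on accuracy.

```python
import re

# Hypothetical word list: only the three hype words named in this article.
HYPE_WORDS = ("breakthrough", "revolutionary", "game-changing")

# Hypothetical cue phrases suggesting a summary at least mentions limitations.
# "limit" matches "limit", "limits", "limited", "limitation(s)" as substrings.
LIMITATION_CUES = ("limit", "caveat", "further research", "not yet", "preliminary")


def review_summary(text: str) -> dict:
    """Flag hype words and report whether any limitation cue appears.

    This is a crude keyword screen to point a human editor at passages
    worth checking; it cannot tell whether a summary is accurate.
    """
    lowered = text.lower()
    # Whole-word matches only, so e.g. "breakthroughs" is not counted.
    hype = [
        word for word in HYPE_WORDS
        if re.search(r"\b" + re.escape(word) + r"\b", lowered)
    ]
    mentions_limitations = any(cue in lowered for cue in LIMITATION_CUES)
    return {"hype_words": hype, "mentions_limitations": mentions_limitations}


if __name__ == "__main__":
    draft = "A revolutionary vaccine could save millions."
    print(review_summary(draft))
    # {'hype_words': ['revolutionary'], 'mentions_limitations': False}
```

A draft flagged this way still needs a person to judge whether the strong word is earned and whether the study's real limitations are stated; an empty result does not mean the summary is correct.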

Abigail Eisenstadt, a senior writer at SciPak, has warned that relying solely on ChatGPT could undermine public trust in science. When people discover that the information they received from AI isn’t entirely accurate, they may grow skeptical not just of the tool but of scientific research as a whole.

3. Why is ChatGPT prone to distorting science?

To understand why, we need to look at how ChatGPT works. ChatGPT doesn’t “understand” science the way a researcher does. It cannot analyze data, test hypotheses, or critique results. Instead, it predicts the next word (more precisely, the next token) based on statistical patterns learned from the enormous body of text it was trained on.

As a result, text generated by ChatGPT can read smoothly yet be thin on scientific substance. It prioritizes readability over accuracy, because a chat model is tuned to produce responses users find helpful and easy to read, not to adhere strictly to academic standards. This is why ChatGPT summaries often cut important details or replace them with general, pleasant-sounding but empty phrases.

Another factor is the tendency to exaggerate. ChatGPT was trained largely on text from the internet, where news outlets, social media, and blogs frequently use strong language to attract readers. The model picked up this habit and reproduces it when writing about science. While this makes the text more appealing, it seriously distorts how the public understands the importance of the research.
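The statistical mechanism described in this section, and the way phrasing that is frequent in the training text resurfaces in the output, can be illustrated with a deliberately tiny Python sketch. It is not how ChatGPT is built: ChatGPT is a large neural network that works on tokens and was trained on enormous amounts of text, whereas this toy bigram model simply counts which word follows which in an invented three-sentence corpus. The corpus and the function names are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical, invented "training corpus"; real models see vastly more text.
corpus = (
    "the study is a breakthrough . "
    "the study is a breakthrough . "
    "the study is a first step ."
).split()

# Bigram counts: for each word, how often each other word follows it.
follow_counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    follow_counts[prev][nxt] += 1


def predict_next(word):
    """Return the most frequent follower of `word`, or None if unseen.

    Nothing here checks whether the resulting sentence is true;
    the choice depends only on frequency in the training text.
    """
    options = follow_counts.get(word)  # .get avoids creating empty entries
    if not options:
        return None
    return options.most_common(1)[0][0]


def generate(start, length):
    """Greedily extend `start` by up to `length` predicted words."""
    words = [start]
    for _ in range(length):
        nxt = predict_next(words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


if __name__ == "__main__":
    print(generate("the", 4))  # the study is a breakthrough
```

Because “breakthrough” follows “a” twice in the invented corpus and “first” only once, the toy model calls the study a breakthrough, and no step in the procedure asks whether that is true. Real models are vastly more sophisticated, but they too generate text from learned patterns rather than by checking claims against the evidence.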

Finally, ChatGPT struggles to articulate the limits of a study. A science journalist will always ask: “What does this study not solve? What further steps are needed to confirm the findings?” ChatGPT, by contrast, simply stops at reporting the results. It is this lack of critical thinking that makes it difficult for AI to replace humans in science communication.

4. Risk to public trust

The biggest consequence of the public accessing science through ChatGPT without verification is the risk of misunderstanding and loss of trust. When people constantly hear about “breakthroughs,” they expect scientific discoveries to quickly translate into products, medicines, or environmental solutions. But when that doesn’t happen, disappointment sets in. Over time, trust in science is eroded.

This risk is greater still in sensitive areas like health and climate. If ChatGPT exaggerates a vaccine study, the public might believe a new vaccine is ready to save millions of lives when it has only been tested in animals. Or if AI oversimplifies a study on climate change, readers might conclude the problem is solved and neglect the action that is still needed.

Trust in science is already being challenged by a wave of misinformation on the internet. If AI contributes to the spread of misinformation, even unintentionally, its impact could be even more severe than that of regular fake news, because AI-generated content often sounds more polished and credible. This is why experts insist that we cannot entrust all the responsibility of science communication to a machine.

No matter how much technology advances, the human element remains central to science communication. Journalists, editors, and experts are not just “storytellers,” but also the guardians of the integrity of science as it is communicated beyond the academic community.

A good science journalist knows to ask questions like: “What makes this study important?”, “What is new compared with previous studies?”, and “What limitations do readers need to understand?”. They can also place the results in their social, political, and economic context so the public can see their practical significance. This is something ChatGPT cannot do.

In addition, humans have the intuition and experience to recognize when information needs to be highlighted and when it needs to be reduced to avoid misunderstanding. This sensitivity cannot be programmed perfectly. Therefore, although AI can assist, the role of humans in science communication is irreplaceable.

5. ChatGPT as a support tool, not a replacement

That doesn’t mean ChatGPT is useless in this area. Used properly, AI can be a valuable tool: it can help journalists save time by producing first-pass summaries, quickly translate difficult passages, or suggest different ways to express the same idea. Given the huge volume of scientific research published every day, this is no small help.

However, the core work of deciding which information is important, how to interpret it, and how to ensure it is not misleading still has to be done by humans. ChatGPT can write a draft, but that draft needs to be checked, edited, and refined by an editor or journalist. Used as a support tool, AI can show its strengths without posing undue risk.

6. Conclusion

Science communication has always been a challenge, requiring a balance between accuracy and accessibility. ChatGPT, with its smooth and readable text, could offer new opportunities for popularizing science. But the SciPak team’s research has shown that the price of readability is accuracy. And in science, even a small error can have major consequences for social perception.

So we need to be very careful. ChatGPT can be a companion to science journalists, but it cannot replace them. Only when humans take the central role, ensuring honesty and precision in every word, can science be properly communicated to the public. In the AI era, the challenge is not to eliminate technology but to learn to use it responsibly, so that science remains understandable, honest, and trustworthy.